David D. Baek

I am a PhD student at MIT EECS, where I am fortunate to be advised by Prof. Max Tegmark in the Tegmark AI Safety Group. I am passionate about leveraging mathematical analysis, machine learning, and algorithm design to solve challenging real-world problems!

Email / CV / Google Scholar / LinkedIn / Twitter

Research

My research interests revolve around representation learning, LLM interpretability, and AI alignment. Modern machine learning models often operate like black boxes: my goal is to understand the mechanisms that enable the success of these large-scale models, and thereby make them more interpretable and better aligned with human goals.

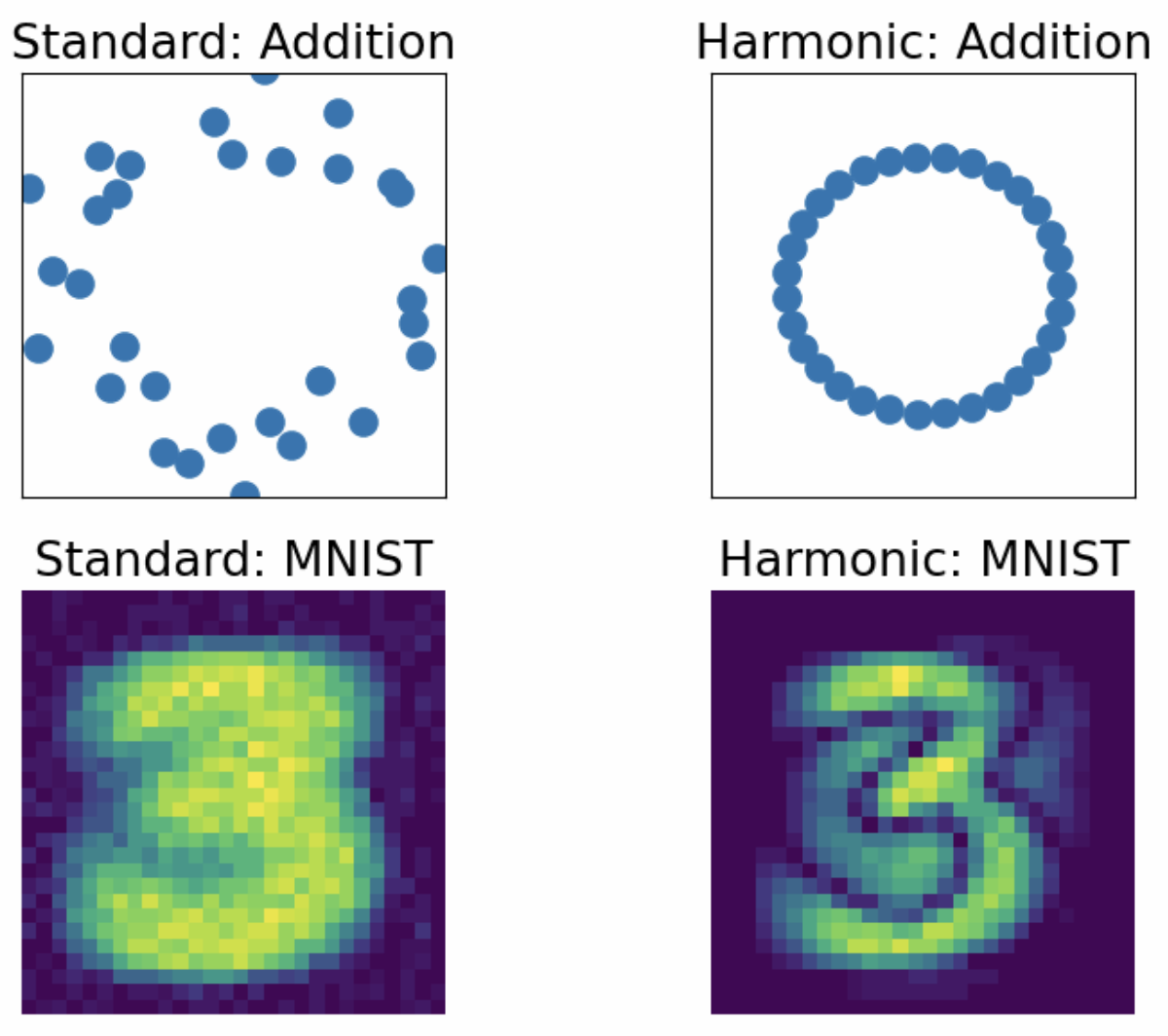

Harmonic Loss Trains Interpretable AI Models
David D. Baek*, Ziming Liu*, Riya Tyagi, Max Tegmark
arXiv, 2025
arxiv / code / twitter
We introduce harmonic loss as an alternative to the standard cross-entropy (CE) loss for training neural networks and LLMs. The post about the paper received 1.1 million views on Twitter.

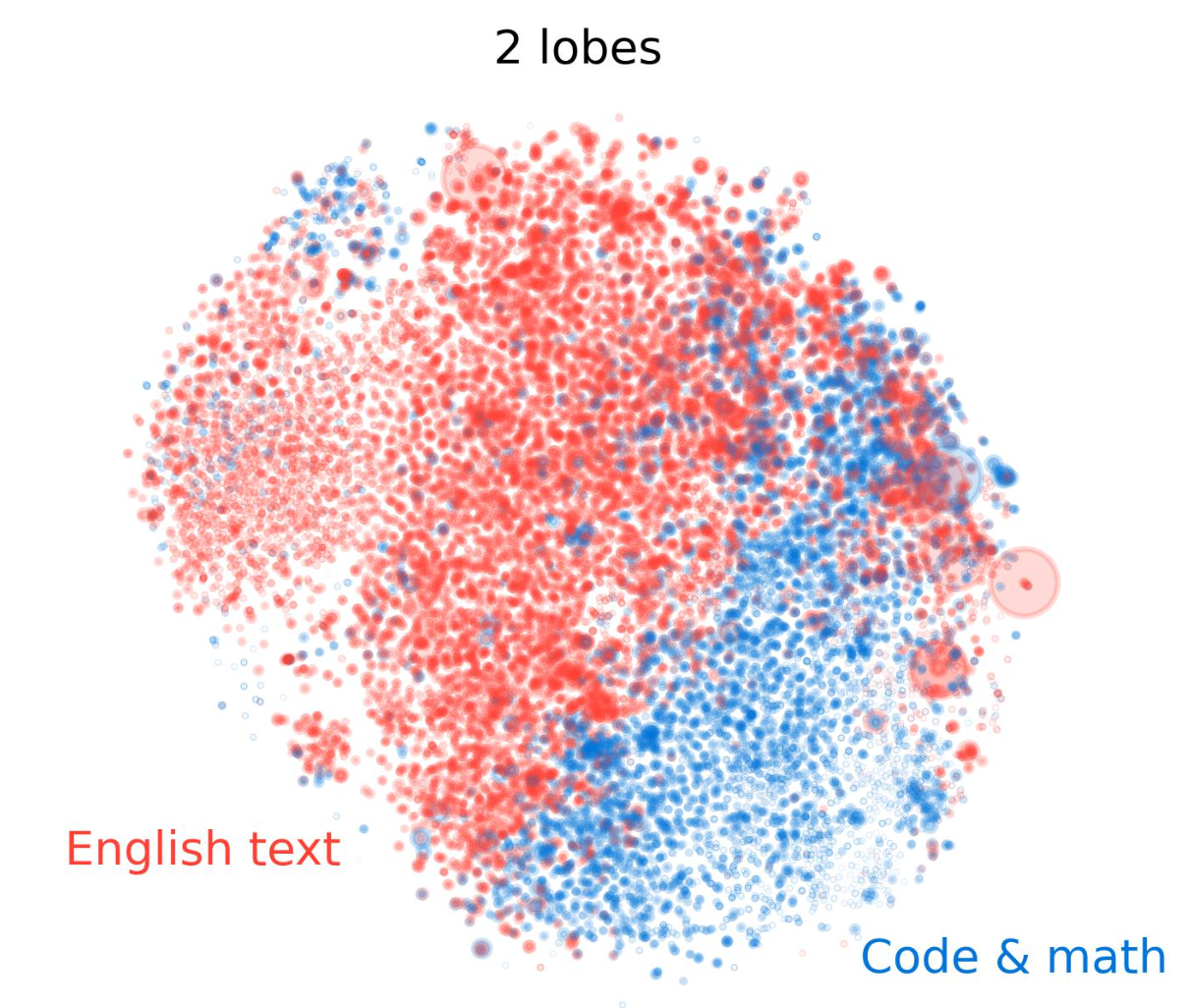

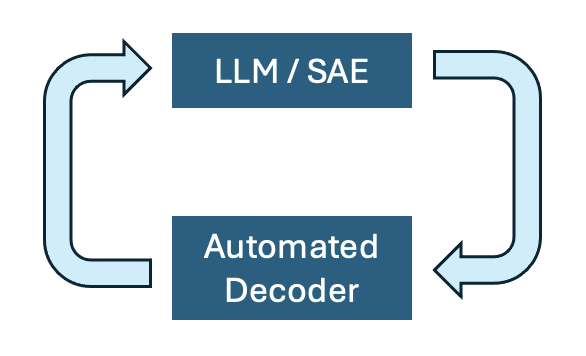

The Geometry of Concepts: Sparse Autoencoder Feature Structure
David D. Baek*, Yuxiao Li*, Eric J. Michaud*, Joshua Engels, Xiaoqing Sun, Max Tegmark
arXiv, 2024
arxiv / code / twitter
We study the geometric structure of sparse autoencoder (SAE) feature vectors at three scales: (a) the small-scale “atomic” structure, (b) the intermediate-scale “brain” structure, and (c) the large-scale “galaxy” structure. The post about the paper received 300K views on Twitter.

Generalization from Starvation: Hints of Universality in LLM Knowledge Graph Learning
David D. Baek, Yuxiao Li, Max Tegmark
arXiv, 2024
arxiv
We study the geometric structure of knowledge representations in large language models (LLMs) and examine hints of representation universality.

GenEFT: Understanding Statics and Dynamics of Model Generalization via Effective Theory
David D. Baek, Ziming Liu, Max Tegmark
ICLR workshop on Bridging the Gap between Practice and Theory in Machine Learning, 2024
arxiv
We present GenEFT, an effective theory framework for shedding light on the statics and dynamics of neural network generalization, and illustrate it with graph learning examples.

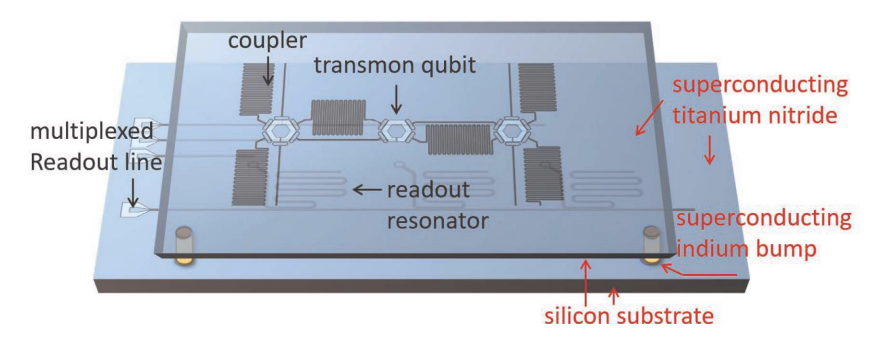

Design of scalable superconducting quantum circuits using flip-chip assembly
Seong Hyeon Park, David D. Baek, Insung Park, Seungyong Hahn
IEEE Transactions on Applied Superconductivity, 2022
arxiv
We present a method for designing highly scalable superconducting quantum circuits using flip-chip assembly and a multi-path coupling strategy.

Class Projects

Filtering Data to Improve NeRF for Driving Scenes
Shiva Sreeram, David D. Baek
Advances in Computer Vision, 2024
arxiv
We propose a filter-based data-preprocessing strategy for NeRF that enables autonomous driving scene reconstruction with reduced compute time and memory and minimal loss in performance.

Automated System for effectively managing leaves of Korean soldiers
Geonyoung Park, David D. Baek
Korean Military Open Source Online Hackathon, 2020
We built a web application that automatically manages the leaves of Korean soldiers, using Node.js, Express, MongoDB, and Passport.js for the backend and Vue, Vuetify, and Chart.js for the frontend.

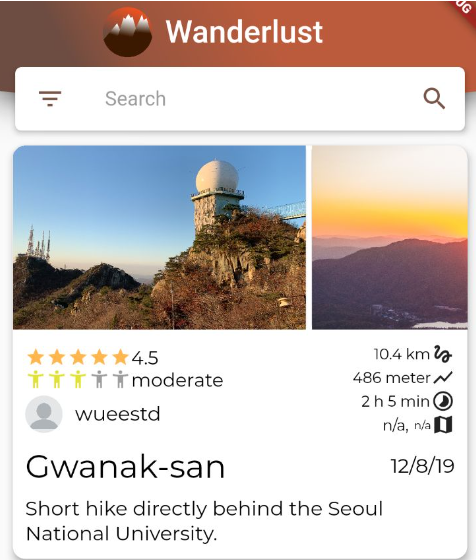

Wanderlust: Community for Hikers
André Leon Poncelet, David D. Baek, Dimitri Wuest, Emil Johansson, Jonas
Mobile Computing and its Applications, 2019
We built a mobile application that serves as both a guide and a community for hikers all over the world, using Flutter, Google Maps, and Google Firebase.

Design and source code from Leonid Keselman's Jekyll fork of Jon Barron's website.